tl;dr

Spec-driven development means writing precise specifications before writing any code. With OpenSpec, I defined each feature of Slop Detector in short documents that explain the problem, the goal, and the structure. This kept the context load low for coding tools like Claude and Codex while keeping each task traceable. Slop Detector runs on Cloudflare Workers and checks text for AI-style phrasing using 45 regex patterns. It reports which expressions appear and assigns a confidence score for how likely the text is to be synthetic. Writing the specifications first keeps the code consistent with the intent and limits the AI agent's improvisation during development.

Spec-Driven Development

Instead of diving straight into vibe-coding, I used OpenSpec to create specification documents for Slop Detector's main application features (under /openspec/specs/) and for individual feature additions (under /openspec/changes/). These documents described the problems, the goals, and the architecture. Before any code was written, everything was laid out clearly, with the AI agent acting first as a BA (Business Analyst) and then as a developer.

I had played with GitHub’s Spec-Kit a bit, but found it too expansive: it produces so much documentation that development becomes slower and more expensive, because more tokens are used up in the process. OpenSpec is lightweight and focuses on generating just enough documentation to guide the AI agent without overwhelming it (or rather, without filling up its context).

Slop Detector came to life through this process. It’s a tool that detects synthetic writing by analysing text for linguistic and stylistic signals, using a pattern-based approach: I curated 45 regex patterns that cover AI phrases, business jargon, and rhetorical constructions. The patterns are based on this Wikipedia Project Page, plus words, bigrams and trigrams common in (English) AI-generated text that I identified with the help of ChatGPT.
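To illustrate the pattern-based approach, here is a minimal TypeScript sketch of running a list of categorised regex patterns over a text to get per-category counts and context snippets, similar to the report at the end of this post. It is not Slop Detector's actual code: the pattern shape, the three example patterns (only "implementation", "align" and "enable" come from the report), the "low"/"high" severity labels and the 50-character context window are all assumptions.

```typescript
// Severity levels: "Medium" and "Very Low" appear in the report; "low" and "high" are assumed.
type Severity = "very-low" | "low" | "medium" | "high";

interface SlopPattern {
  category: string; // report heading, e.g. "AI-Favored Nouns"
  severity: Severity;
  regex: RegExp; // must carry the "g" flag so exec() advances through the text
}

interface PatternHit {
  category: string;
  severity: Severity;
  count: number;
  matches: { text: string; context: string }[];
}

// Three illustrative patterns; the real list has 45 and is structured however the project defines it.
const PATTERNS: SlopPattern[] = [
  { category: "AI-Favored Nouns", severity: "very-low", regex: /\bimplementation\b/gi },
  { category: "AI-Favored Verbs", severity: "very-low", regex: /\b(?:align|leverage|delve)\b/gi },
  { category: "Dramatic Action Words", severity: "medium", regex: /\b(?:enable|unlock|unleash)\b/gi },
];

// Slice `radius` characters either side of a match, like the report's Context lines.
function contextAround(text: string, start: number, end: number, radius = 50): string {
  const from = Math.max(0, start - radius);
  const to = Math.min(text.length, end + radius);
  return "…" + text.slice(from, to) + "…";
}

// Run every pattern over the text and keep only the categories that matched.
function detect(text: string, patterns: SlopPattern[] = PATTERNS): PatternHit[] {
  const hits: PatternHit[] = [];
  for (const p of patterns) {
    if (!p.regex.global) throw new Error(`pattern for ${p.category} needs the "g" flag`);
    p.regex.lastIndex = 0; // global regexes are stateful, so always start from the beginning
    const matches: { text: string; context: string }[] = [];
    let m: RegExpExecArray | null;
    while ((m = p.regex.exec(text)) !== null) {
      if (m[0].length === 0) {
        p.regex.lastIndex++; // guard against looping forever on empty matches
        continue;
      }
      matches.push({ text: m[0], context: contextAround(text, m.index, m.index + m[0].length) });
    }
    if (matches.length > 0) {
      hits.push({ category: p.category, severity: p.severity, count: matches.length, matches });
    }
  }
  return hits;
}
```

Keeping each regex next to its category and severity is what makes a report like this transparent: every hit can be traced back to the rule that produced it.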

I used Claude, and Codex whenever I ran out of context on my Claude plan (yes, even with OpenSpec’s shorter instructions). The workflow had three steps: the coding agent generated draft specifications from my short functional description, I reviewed those specifications, and then I requested the implementation.

Here is an example of a simple feature requirement I wrote for Slop Detector, which OpenSpec then turned into docs (in Claude):

/openspec:proposal add a clear button that clears the text field. Only enable the clear button when there is text in the field.

OpenSpec would generate three files for this feature change:

- a spec.md

- a proposal.md

- a tasks.md

Not too much to review and adjust, but enough to guide the AI agent's coding.

Once I reviewed and finalised the documentation, I would then request implementation:

/openspec:apply add-text-clear-button

Once the change is implemented and tested, it’s time to archive the specs:

/openspec:archive add-text-clear-button

Each OpenSpec step involves background actions that the AI agent works through.

Note: in Codex, the commands use a hyphen instead of a colon: /openspec-proposal, /openspec-apply, and /openspec-archive.
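To give a sense of how small the resulting change is, the behaviour requested in the clear-button proposal can be sketched in a few lines of TypeScript. This is a hypothetical illustration, not the code the agent generated: the element IDs `input-text` and `clear-button`, the helper names, and the reading of "text in the field" as "any non-empty value" are all assumptions.

```typescript
// Minimal structural types so the logic can run outside a browser;
// HTMLTextAreaElement and HTMLButtonElement both satisfy them.
interface TextFieldLike { value: string }
interface ButtonLike { disabled: boolean }

// Requirement: only enable the clear button when there is text in the field.
function syncClearButton(field: TextFieldLike, button: ButtonLike): void {
  button.disabled = field.value.length === 0;
}

// Requirement: the clear button clears the text field (and is then disabled again).
function clearField(field: TextFieldLike, button: ButtonLike): void {
  field.value = "";
  syncClearButton(field, button);
}

// Browser wiring, using hypothetical element IDs.
function wireClearButton(doc: Document): void {
  const field = doc.getElementById("input-text") as HTMLTextAreaElement | null;
  const button = doc.getElementById("clear-button") as HTMLButtonElement | null;
  if (!field || !button) return;
  field.addEventListener("input", () => syncClearButton(field, button));
  button.addEventListener("click", () => clearField(field, button));
  syncClearButton(field, button); // set the initial state on page load
}
```

Separating the two requirements into small functions mirrors how they appear in the proposal, which makes it easy to check the code against the spec during review.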

Implementation

The current version of Slop Detector is quite lightweight: it runs entirely on Cloudflare Workers and processes requests in under 50 ms. It doesn’t use any AI models and doesn’t store any data. Users can paste text or upload .txt, .md, or .html files, and Slop Detector generates a transparent report listing which patterns were triggered, along with a confidence score for how likely the text is to be AI slop.
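The request flow described above can be sketched as a stateless Cloudflare Worker in TypeScript. This is a minimal sketch under stated assumptions, not the project's code: the form field name `file`, the JSON report shape, the "File Upload" source label and the crude regex-based HTML stripping are hypothetical, and the pattern-matching and scoring step is left as a comment. Nothing is written to storage, in line with the no-data-stored design.

```typescript
// Hypothetical upload rules, taken from the file types the tool accepts.
const ALLOWED_EXTENSIONS = [".txt", ".md", ".html"];

function extensionOf(name: string): string {
  const dot = name.lastIndexOf(".");
  return dot === -1 ? "" : name.slice(dot).toLowerCase();
}

// Rough HTML-to-text: drops script/style blocks and tags, then collapses whitespace.
function htmlToText(html: string): string {
  return html
    .replace(/<(script|style)[^>]*>[\s\S]*?<\/\1>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Accept either a multipart file upload (hypothetical field "file") or a raw text body.
async function readSubmission(request: Request): Promise<{ text: string; source: string }> {
  const type = request.headers.get("content-type") ?? "";
  if (type.startsWith("multipart/form-data")) {
    const form = await request.formData();
    const file = form.get("file");
    if (file === null || typeof file === "string") throw new Error("missing file");
    const ext = extensionOf(file.name);
    if (ALLOWED_EXTENSIONS.indexOf(ext) === -1) throw new Error(`unsupported file type: ${ext}`);
    const raw = await file.text();
    return { text: ext === ".html" ? htmlToText(raw) : raw, source: "File Upload" };
  }
  return { text: await request.text(), source: "Direct Text Input" };
}

const worker = {
  async fetch(request: Request): Promise<Response> {
    if (request.method !== "POST") return new Response("Method Not Allowed", { status: 405 });
    const started = Date.now();
    try {
      const { text, source } = await readSubmission(request);
      // Pattern matching and confidence scoring would run here, entirely in memory.
      const report = {
        metadata: {
          characterCount: text.length,
          wordCount: text.split(/\s+/).filter(Boolean).length,
          analysisDurationMs: Date.now() - started,
          timestamp: new Date().toISOString(),
          submissionSource: source,
        },
      };
      return new Response(JSON.stringify(report), {
        headers: { "content-type": "application/json" },
      });
    } catch (err) {
      return new Response(String(err), { status: 400 });
    }
  },
};

export default worker;
```

Inside a real Worker, Cloudflare's HTMLRewriter could be a sturdier way to extract text from HTML than regular expressions; the regex version just keeps the sketch self-contained.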

Spec-driven development really helped me maintain clarity throughout the vibe-coding process. Every file, test, and implementation task was guided by the corresponding section in the specification doc, so design and implementation were always in sync. The result is a reproducible development method in which AI tools operate within predefined boundaries instead of improvising (too much).

Codifying expectations first and generating code second shows how specification-first workflows can align human intent with AI assistance to produce verifiable software. I was put off by Spec-Kit’s heavy documentation approach, but OpenSpec struck the right balance for this project.

The source code and OpenSpec documentation for Slop Detector are available on GitHub. Check it out!

It also comes with an AI Writing Style Guide that you can provide as context when prompting an AI chatbot. It outlines best practices for writing content that avoids AI slop characteristics to begin with.

Notes

- OpenSpec could do with a constitution file that outlines the project's overall, non-negotiable governing principles and development guidelines. That said, there is still the AGENTS.md file.

- Manual changes sometimes get overwritten by the AI agent during code updates, so it’s best to keep manual code edits to a minimum, or to update the spec document with the same changes (for example, title changes or adding tabIndex to buttons).

- A pain point of the “light” approach is that Slop Detector can’t differentiate between AI-generated text and academic writing (like these), which often shares similar characteristics and is part of what AI models are trained on. It is still a helpful editorial tool for spotting potential AI slop in marketing copy, blog posts, and business writing.

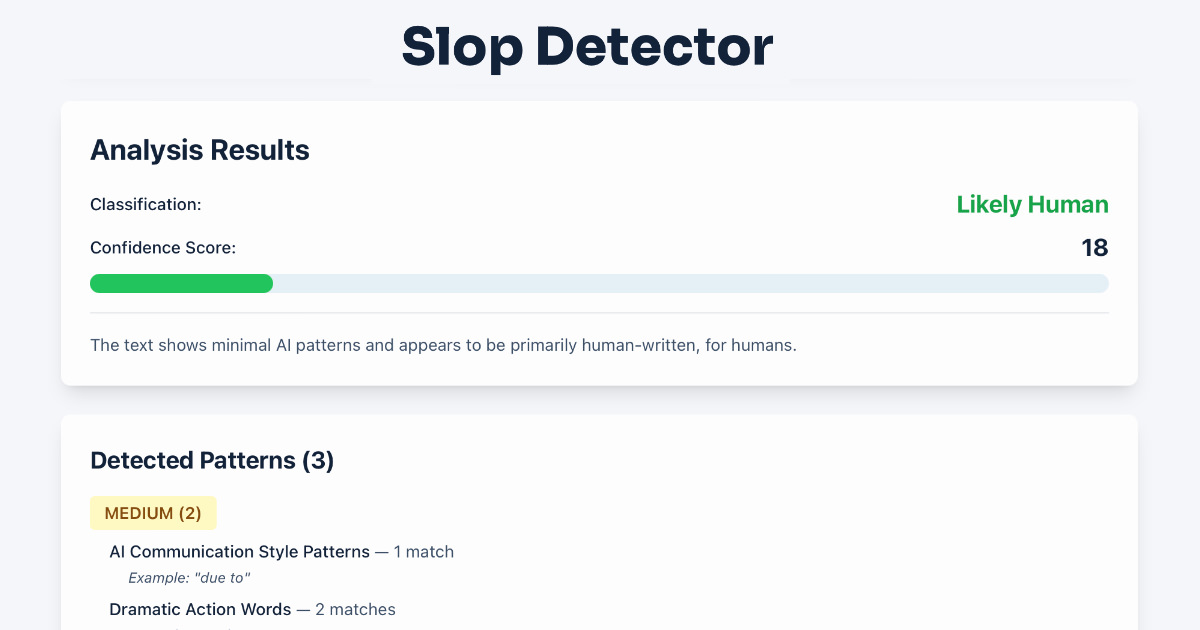

Generated report for an earlier version of this post (the score has gone up since then). I probably should rewrite this with less repetition of “implementation” though :)

Slop Detection Analysis Report

Summary

- Classification: Likely Human

- Confidence Score: 14

- Patterns Detected: 3

Explanation

The text shows minimal AI patterns and appears to be primarily human-written, for humans.

Patterns

Medium Patterns

- Dramatic Action Words (count: 1)
  - Match: enable
    Context: …d a clear button that clears the text field. Only enable the clear button when there is text in the field….

Very Low Patterns

- AI-Favored Nouns (count: 8)
  - Match: implementation
    Context: …oo expansive, too much documentation, which makes implementation slower, and more expensive, as more tokens are use…
  - Match: implementation
    Context: …erating just enough documentation to guide the AI implementation without overwhelming it. The [Slop Detector]…
  - Match: implementation
    Context: …y review of those specifications, followed by the implementation request. An example of specifications created for…
  - Match: implementation.
    Context: … to review and adjust, but enough to guide the AI implementation. Once I reviewed and finalised the documentation,…
  - Match: implementation:
    Context: …finalised the documentation, I would then request implementation: - /openspec-apply add-text-clear-button And once…
  - Match: implementation
    Context: …enspec-archive add-text-clear-button The current implementation of Slop Detector is quite lightweight, running ent…
  - Match: implementation
    Context: …ut the vibe-coding process. Every file, test, and implementation was based on a corresponding section in the specif…
  - Match: implementation
    Context: …g section in the specification doc, so design and implementation were always in sync. This resulted in a reproducib…

- AI-Favored Verbs (count: 1)
  - Match: align
    Context: …, we showed how specification-first workflows can align human intent with AI assistance to produce verifia…

Metadata

- Character Count: 3,076

- Word Count: 446

- Pattern Engine Version: 1.4.0

- Analysis Duration: 0ms

- Timestamp: 2025-10-17T08:35:04.252Z

- Submission Source: Direct Text Input

Warnings

None.