Creating an MCP Server for Your Hugo Blog: Making Your Content AI-Accessible

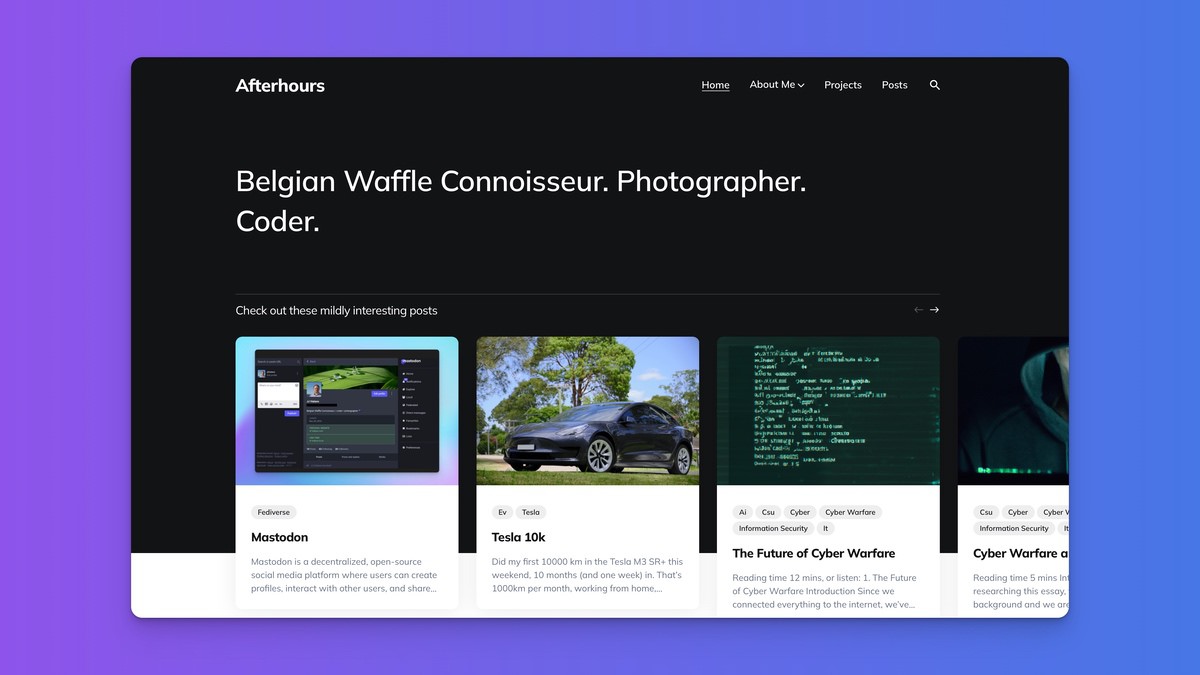

Modern AI assistants like Claude are becoming increasingly powerful at helping with research, writing, and problem-solving. But what if you could let AI assistants directly access and search your blog’s content? That’s what I set out to do by implementing a Model Context Protocol (MCP) server for my Hugo-powered blog.

Of course, AI assistants can browse the web, but having a dedicated MCP server for your blog allows for more efficient and targeted queries.

What is Hugo?

Hugo is a static site generator, built in Go. Unlike traditional content management systems that generate pages dynamically on each request and use a database, Hugo pre-builds your entire website into static HTML, CSS, and JavaScript files that can be served from any web server or CDN.

Hugo excels as a blogging platform because it:

- Builds incredibly fast: Hugo can generate thousands of pages in seconds

- Requires no database: Content is stored in Markdown files with front matter

- Offers flexible templating: The Go template system allows complex content organization

- Supports multiple content types: Posts, pages, taxonomies, and custom content types

- Handles assets intelligently: Built-in image processing, CSS/JS bundling, and more

- Provides excellent SEO: Clean URLs, fast loading times, and semantic HTML output

- Is more secure: No database or server-side code to exploit

What’s not to like?

(I moved from WordPress to Hugo in 2023, and I haven’t looked back since.)

Hugo’s Content Structure

A typical Hugo site organizes content like this:

- content/posts/: Your blog posts in Markdown

- layouts/: HTML templates that define how content is rendered

- static/: Images, CSS, JavaScript, and other static assets like .well-known

- config.toml: Site configuration and settings

This structure makes Hugo perfect for our MCP integration because we can easily extend the build process to generate additional content (llms.txt, markdown files) alongside the main HTML.

What is MCP and Why Should You Care?

Model Context Protocol (MCP) is an open protocol, introduced by Anthropic, that enables AI applications to connect to external data sources and tools. Think of it as a standardized way for AI assistants to “plug into” your services and data.

The MCP ecosystem consists of three main components:

MCP Servers

These are lightweight programs that expose data and functionality to AI assistants. They can provide:

- Tools: Interactive functions the AI can call (like search or content analysis)

- Resources: Static or dynamic content (like your blog posts)

- Prompts: Reusable prompt templates

MCP Clients

These are AI applications that connect to MCP servers. Claude Desktop, for example, is an MCP client that can connect to multiple MCP servers simultaneously, giving it access to your local files, databases, APIs, and more.

The Protocol Itself

MCP uses JSON-RPC 2.0 over various transports (stdio, SSE) to enable secure, standardized communication between clients and servers.
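
To make that a bit more concrete, here is a rough sketch of what a JSON-RPC 2.0 message looks like when a client asks a server to run a tool. The `tools/call` method and its `name`/`arguments` parameters come from the MCP specification; the tool name `search_blog` and the helper `buildToolCall` are made up for illustration and are not taken from my worker.

```typescript
// Minimal JSON-RPC 2.0 message shapes, as used by MCP (illustrative only).
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: Record<string, unknown>;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number | string | null;
  result?: unknown;
  error?: { code: number; message: string };
}

let nextId = 1;

// Build a `tools/call` request for the given tool and arguments.
function buildToolCall(tool: string, args: Record<string, unknown>): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name: tool, arguments: args },
  };
}

// "search_blog" is a hypothetical tool name, used only for this example.
const request = buildToolCall("search_blog", { query: "hugo templates" });
console.log(JSON.stringify(request, null, 2));
```

The server answers with a `JsonRpcResponse` carrying the same `id`, with either a `result` or an `error`.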

For our Hugo blog, we’ll be creating an MCP server that exposes our blog content as a searchable resource (through an llms-full.txt file), allowing AI assistants to find and reference our content when helping with queries.

Generating llms.txt Files with Hugo

The first step is making our blog content available in a format that’s easy for our MCP server to consume. We’ll generate two files:

- llms.txt: A lightweight markdown index of our content

- llms-full.txt: Complete markdown content for full-text search

See also https://llmstxt.org for the standard.

Hugo Template Setup

The beauty of Hugo’s templating system is that we can create custom output formats alongside our regular HTML pages. We’ll set up templates and configuration to generate both a lightweight content index (llms.txt) and a comprehensive full-text version (llms-full.txt) of our blog content, as well as individual markdown files for each post. (The two llms.txt how-tos listed under References walk through the setup in detail.)

The templates use Hugo’s built-in Go-based functions to iterate through all published pages, extract metadata like titles and dates, and format the content in a structure that’s easy for our MCP server to parse and search.

When you run hugo build, these templates will generate /llms.txt and /llms-full.txt files containing your blog content in a search-friendly format. Whenever a new post is published, these files will be updated automatically.
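
In Hugo itself this is done with Go templates and a custom output format, so the snippet below is not the template. It is only a small TypeScript model of the file shape the llms.txt convention describes: an H1 title, a blockquote summary, and a section of markdown links. The `Post` type, the `renderLlmsTxt` helper, and the example.com URL are assumptions for illustration.

```typescript
// Illustrative model of an llms.txt index; Hugo produces this via Go templates.
interface Post {
  title: string;
  url: string;     // link to the markdown version of the post
  summary: string; // short description shown after the link
}

function renderLlmsTxt(siteTitle: string, siteDescription: string, posts: Post[]): string {
  const lines = [`# ${siteTitle}`, "", `> ${siteDescription}`, "", "## Posts", ""];
  for (const post of posts) {
    lines.push(`- [${post.title}](${post.url}): ${post.summary}`);
  }
  return lines.join("\n") + "\n";
}

// Hypothetical sample data.
const index = renderLlmsTxt("Example Blog", "Notes on the web.", [
  {
    title: "Hello Hugo",
    url: "https://example.com/posts/hello-hugo/index.md",
    summary: "Why I moved to Hugo.",
  },
]);
console.log(index);
```

The full-text variant follows the same idea, except that each entry carries the complete markdown body of the post instead of a one-line summary.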

Building the Cloudflare Worker MCP Server

Now for the exciting part - creating an MCP server that can search through our blog content, vibe-coded with the help of Claude Code. We’ll deploy this as a Cloudflare Worker for global availability and fast response times.

MCP Server Implementation

The server handles the full MCP protocol, including:

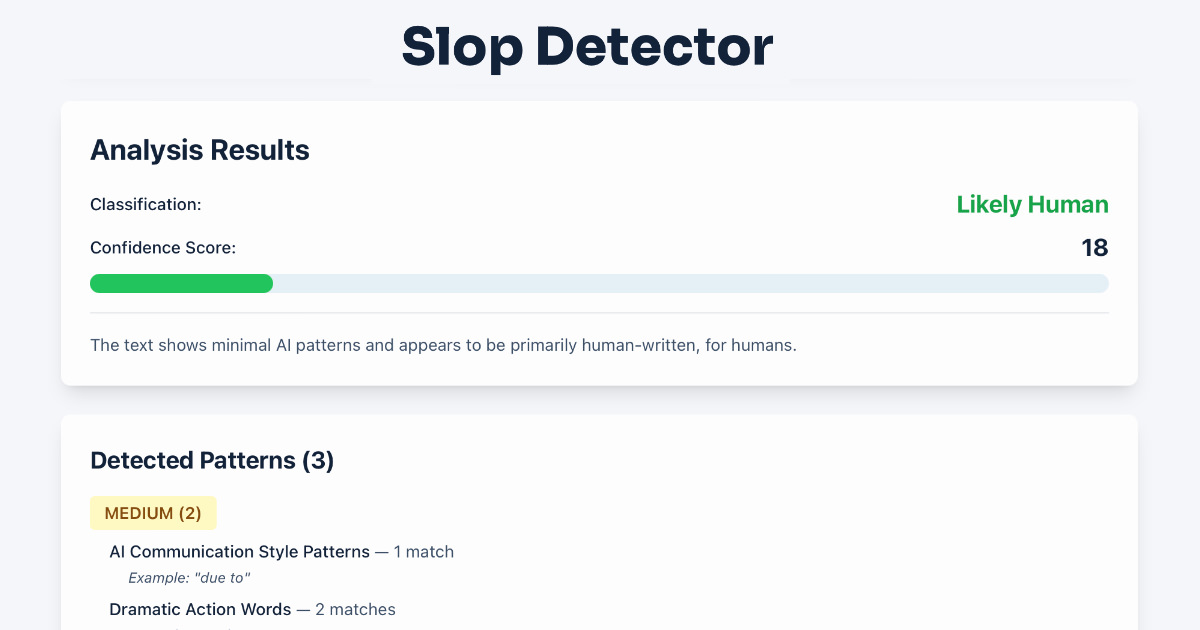

- Tool capabilities: Providing search functionality that AI assistants can call (see screenshot above)

- Resource management: Exposing your blog content as searchable resources

- Protocol compliance: Full JSON-RPC 2.0 support for seamless integration
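
As a rough illustration of what that protocol handling involves, here is a minimal sketch of a JSON-RPC dispatcher for three MCP methods (`initialize`, `tools/list`, `tools/call`). This is not the code running in my worker: the tool name `search_blog`, the server name, and the `handleRpc` function are hypothetical, the protocol version string is just one published MCP revision, and a real server also deals with transports, notifications, and resources.

```typescript
type JsonRpcId = number | string | null;

interface RpcRequest {
  jsonrpc: "2.0";
  id: JsonRpcId;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
}

interface RpcResponse {
  jsonrpc: "2.0";
  id: JsonRpcId;
  result?: unknown;
  error?: { code: number; message: string };
}

// Hypothetical tool definition; a real worker's tool names may differ.
const SEARCH_TOOL = {
  name: "search_blog",
  description: "Search blog posts by free-text query",
  inputSchema: {
    type: "object",
    properties: { query: { type: "string" } },
    required: ["query"],
  },
};

// Route one request to the matching handler; `search` is injected by the caller.
function handleRpc(req: RpcRequest, search: (query: string) => string): RpcResponse {
  const ok = (result: unknown): RpcResponse => ({ jsonrpc: "2.0", id: req.id, result });
  const fail = (code: number, message: string): RpcResponse => ({
    jsonrpc: "2.0",
    id: req.id,
    error: { code, message },
  });

  switch (req.method) {
    case "initialize":
      return ok({
        protocolVersion: "2024-11-05", // example revision, adjust as needed
        capabilities: { tools: {} },
        serverInfo: { name: "blog-search-sketch", version: "0.0.1" },
      });
    case "tools/list":
      return ok({ tools: [SEARCH_TOOL] });
    case "tools/call": {
      if (req.params?.name !== SEARCH_TOOL.name) return fail(-32602, "Unknown tool");
      const query = String(req.params?.arguments?.query ?? "");
      return ok({ content: [{ type: "text", text: search(query) }] });
    }
    default:
      return fail(-32601, "Method not found"); // standard JSON-RPC error code
  }
}
```

Passing the search function in as a parameter keeps the protocol layer separate from how the content is actually indexed.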

You can find the complete Cloudflare Worker MCP implementation and deployment configuration in this Cloudflare GitHub repository. The original implementation, which queries a public weather API, is a great starting point; I modified it (with Claude Code) to query my blog’s llms-full.txt file instead. The full implementation details (generated by Claude Code) are available in the GitHub repository.

My worker fetches my blog’s llms-full.txt file, parses the structured markdown content, creates a cache, and provides search capabilities that allow AI assistants to find all relevant content based on the questions asked (not just a keyword search as available on the site).
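
The parse-and-search step could look something like the sketch below. It is a simplified stand-in, not the code in my worker: it assumes every post in llms-full.txt starts with a level-1 heading (`# Title`), it scores by plain term counts, and it leaves out fetching and caching. The names `parseSections` and `search` are illustrative.

```typescript
interface Section {
  title: string;
  body: string;
}

// Split the file into sections at level-1 headings ("# Title").
// Assumption: each post begins with such a heading. A "# " line inside a
// fenced code block would be misread as a new post by this naive splitter.
function parseSections(markdown: string): Section[] {
  const sections: Section[] = [];
  let current: Section | null = null;
  for (const line of markdown.split("\n")) {
    if (line.startsWith("# ")) {
      current = { title: line.slice(2).trim(), body: "" };
      sections.push(current);
    } else if (current) {
      current.body += line + "\n";
    }
  }
  return sections;
}

// Rank sections by how often the query terms occur; title hits count extra.
function search(sections: Section[], query: string, limit = 5): Section[] {
  const terms = query.toLowerCase().split(/\s+/).filter(Boolean);
  const scored = sections.map((section) => {
    const title = section.title.toLowerCase();
    const body = section.body.toLowerCase();
    let score = 0;
    for (const term of terms) {
      if (title.includes(term)) score += 3;
      score += body.split(term).length - 1; // occurrences of term in the body
    }
    return { section, score };
  });
  return scored
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((entry) => entry.section);
}
```

In a worker, the parsed sections would typically be kept in memory (or in a cache) so that the 1MB+ file is not fetched and re-parsed on every request.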

Making It Discoverable with .well-known/mcp.json

The final step is making your MCP server discoverable by creating a .well-known/mcp.json file in your Hugo static directory, for example:

    {
      "version": "1.0",
      "servers": [
        {
          "name": "Halans Blog Searcher",
          "description": "MCP server for querying halans.com content",
          "endpoint": "https://halans-mcp-server.halans.workers.dev/sse",
          "capabilities": ["resources", "tools"]
        }
      ]
    }

Add this to your Hugo static/.well-known/ directory so it’s served at (for example) https://halans.com/.well-known/mcp.json.
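
If you want to sanity-check the file before publishing, a small validator like the sketch below can help. The field names mirror the example in this post; treat them as this post's convention rather than a fixed schema. `parseDiscovery` and the HTTPS check are my own assumptions.

```typescript
interface McpServerEntry {
  name: string;
  description: string;
  endpoint: string;
  capabilities: string[];
}

interface McpDiscovery {
  version: string;
  servers: McpServerEntry[];
}

// Type guard for one server entry, based on the fields used in this post.
function isServerEntry(value: unknown): value is McpServerEntry {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.name === "string" &&
    typeof v.description === "string" &&
    typeof v.endpoint === "string" &&
    v.endpoint.startsWith("https://") && // assumption: only HTTPS endpoints
    Array.isArray(v.capabilities) &&
    v.capabilities.every((c) => typeof c === "string")
  );
}

// Returns the parsed document, or null if it is not valid JSON or lacks fields.
function parseDiscovery(json: string): McpDiscovery | null {
  let data: unknown;
  try {
    data = JSON.parse(json);
  } catch {
    return null;
  }
  if (typeof data !== "object" || data === null) return null;
  const d = data as Record<string, unknown>;
  if (typeof d.version !== "string" || !Array.isArray(d.servers)) return null;
  if (!d.servers.every(isServerEntry)) return null;
  return { version: d.version, servers: d.servers };
}
```

You could run this against the file in static/.well-known/ as part of a build check, so a typo in the JSON is caught before deployment.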

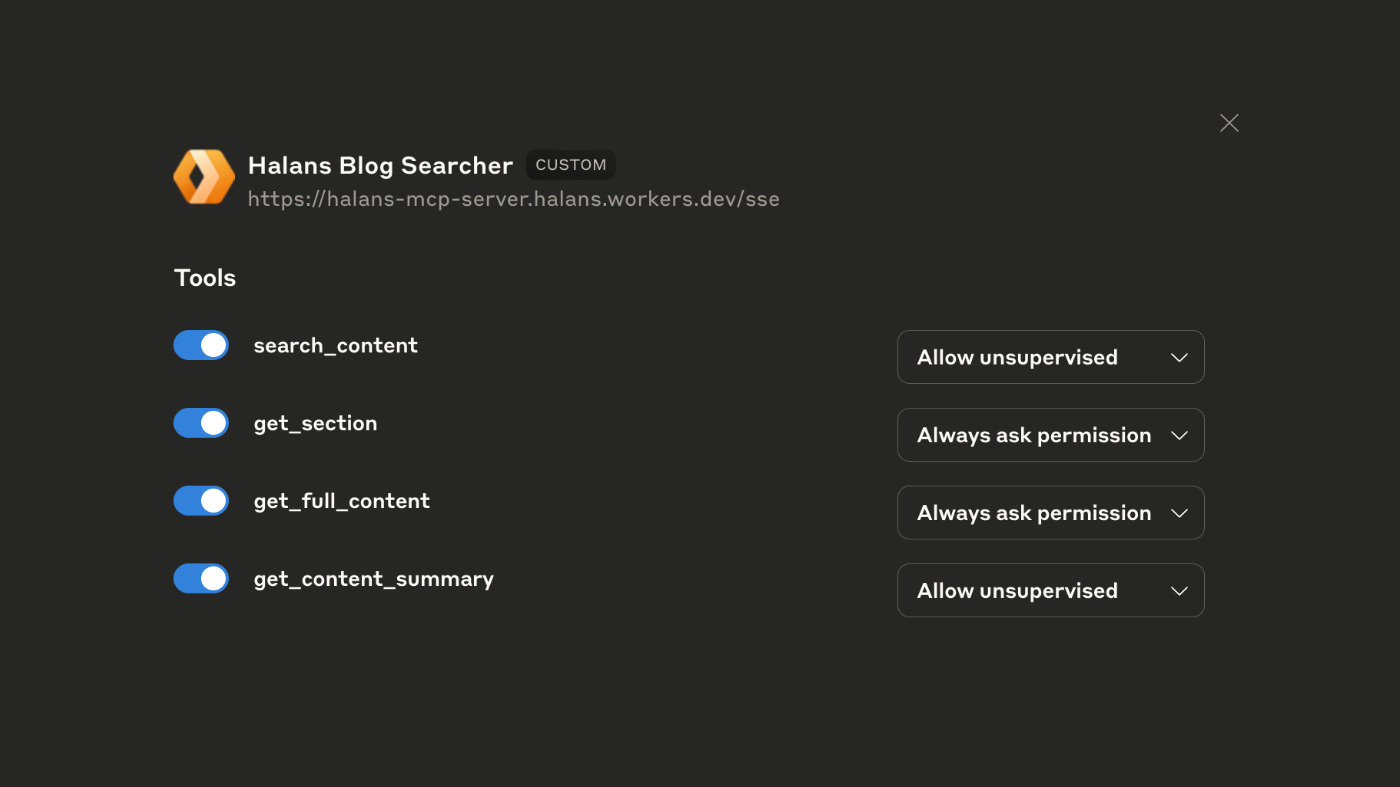

Connecting to Claude Desktop

To use your new MCP server with Claude Desktop:

- Open Claude Desktop preferences

- Add a new custom connector that points at your server’s URL. Mine is the Cloudflare Worker at https://halans-mcp-server.halans.workers.dev/sse

The idea is that, once you’ve set up the .well-known discovery, Claude and other tools could automatically detect your MCP server when you mention your domain, but that is not yet the case.

Accomplishment

With this setup, I now have:

- Automated content indexing: Hugo generates AI-searchable versions of your content on every build

- Fast, global search: Cloudflare Workers provide low-latency access to your content worldwide

- AI integration: Claude (and other MCP clients) can now search your content and answer questions about it (not just run keyword searches), referencing your up-to-date blog posts in their answers.

- Standardized protocol: Your implementation follows MCP standards, making it compatible with future tools.

The current llms-full.txt file is 1.4MB, or about 364k tokens (1,200,651 characters). There are other ways to implement an MCP server, such as backing it with a vector database, but I wanted to keep it simple and use Cloudflare Workers, which are free for low usage.

Next Steps

Consider extending your MCP server with additional capabilities:

- Content analysis tools: Let AI analyze your writing patterns or suggest related posts

- Draft management: Provide access to unpublished content for editing assistance

- Multi-language support: Handle international content with proper language detection

The Model Context Protocol opens up exciting possibilities for making our content more accessible to AI assistants, if/when/how you want to. By implementing this Hugo + MCP integration, you’ve created a bridge between your static site and the dynamic world of AI-powered agents.

Your blog is no longer just a collection of posts - it’s become a searchable, AI-accessible knowledge base that can actively participate in conversations and problem-solving sessions.

Side-note: I spent about 4-5 hours in total on this integration, including research, implementation using Claude Code, and testing/tweaking. I did have prior experience setting up the Cloudflare MCP Worker, from a Cloudflare workshop.

References

- Model Context Protocol Specification

- Cloudflare Workers Documentation

- Remote MCP Server Guide

- Hugging Face MCP Course

- Adding llms.txt & markdown output to your Hugo site

- LLMs.txt to help LLMs grok your content

- Hugo Documentation
