Notes from Andrej Karpathy’s talk Software Is Changing (Again)

Software’s New Era: How AI Is Rewriting the Rules Again

Software is undergoing a seismic shift, again.

For decades, software development followed a predictable path: humans wrote code to instruct computers. Then came neural networks, ushering in Software 2.0, where data and optimization shaped intelligent models. Now, we are witnessing the dawn of Software 3.0, a world where large language models (LLMs) are programmable through natural language, blurring the lines between human and machine control.

Andrej Karpathy, co-founder of OpenAI and former Sr. Director of AI and Autopilot Vision at Tesla, paints a compelling picture of this new landscape. His message: software isn’t just changing, it’s being reinvented, and every developer entering the field must learn to navigate this evolving ecosystem.

The Three Generations of Software

Software 1.0: Traditional Programming

This is the classic paradigm: humans write explicit code that directly controls computer behavior. Software 1.0 dominates legacy systems and forms the foundation of most software products.

Software 2.0: Neural Network Programming

Software 2.0 shifts from manual coding to training neural networks using vast datasets. Instead of writing each rule, developers tune datasets and let optimizers craft the system’s behavior.

A key example: Tesla’s Autopilot gradually replaced C++ code (1.0) with neural networks (2.0) capable of handling complex, perception-driven tasks like sensor fusion.

Software 3.0: Natural Language Programming

Software 3.0 introduces LLMs as a new programmable medium. Here, prompts written in natural language effectively program LLMs to perform tasks. This is more than a new interface; Karpathy frames it as a fundamentally different type of computer.

Key Example:

Tasks like sentiment analysis can now be performed by:

- Writing explicit Python code (1.0)

- Training a neural network (2.0)

- Prompting an LLM in plain English (3.0)
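
To make the contrast concrete, here is a minimal sketch of the first and third options side by side. The keyword lists, the prompt wording, and the `llm` callable (any function that takes a prompt string and returns the model's reply) are assumptions made for illustration, not something shown in the talk. The 2.0 variant is omitted because it would need a labeled dataset and a training loop.

```python
# Software 1.0: behavior is spelled out rule by rule.
POSITIVE = {"good", "great", "love", "excellent"}  # hand-picked, illustrative only
NEGATIVE = {"bad", "terrible", "hate", "awful"}    # hand-picked, illustrative only


def sentiment_v1(text: str) -> str:
    """Count hand-picked keywords; every rule is written by a human."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Software 3.0: the "program" is an English prompt handed to an LLM.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the following review as exactly one word: "
    "positive, negative, or neutral.\n\nReview: {review}\nSentiment:"
)


def sentiment_v3(text: str, llm) -> str:
    """`llm` is a hypothetical callable: prompt string in, reply string out."""
    reply = llm(PROMPT_TEMPLATE.format(review=text))
    return reply.strip().lower()
```

In the 1.0 version, changing behavior means editing code. In the 3.0 version, it means editing the English in `PROMPT_TEMPLATE`.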

Large Language Models as Operating Systems

Karpathy argues that LLMs function much like early operating systems:

- Centralized compute: Today’s LLMs are hosted in the cloud, reminiscent of the mainframe era, with users acting as “thin clients” on time-sharing systems.

- Context windows as memory: LLMs have limited working memory, making their behavior dependent on carefully structured prompts and inputs.

- Limited personal computing: The personal LLM revolution, where individuals can run powerful models locally, is still on the horizon.
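
The "context window as memory" point can be sketched as a budgeting problem: only so much text fits, so an application has to decide what to keep. The example below is a rough illustration under stated assumptions. `count_tokens` is a crude stand-in that counts whitespace-separated words (real tokenizers differ), and the newest-first policy in `fit_to_window` is just one possible choice.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(system: str, history: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus the newest messages that fit in `budget` tokens."""
    remaining = budget - count_tokens(system)
    kept: list[str] = []
    for message in reversed(history):  # walk from newest to oldest
        cost = count_tokens(message)
        if cost > remaining:
            break  # older messages are dropped once the budget is spent
        kept.append(message)
        remaining -= cost
    return [system] + kept[::-1]  # restore chronological order
```

Anything dropped here is simply invisible to the model, which is why prompt structure matters so much.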

LLMs are more than utilities; they’re complex ecosystems resembling modern OS structures, with players ranging from closed-source giants like OpenAI and Anthropic to open-source challengers like Meta’s LLaMA.

Rethinking Autonomy: From Agents to Augmentations

The Rise of Partial Autonomy Apps

Karpathy emphasizes the growth of partial autonomy applications: software that integrates LLMs to assist users while keeping humans in control.

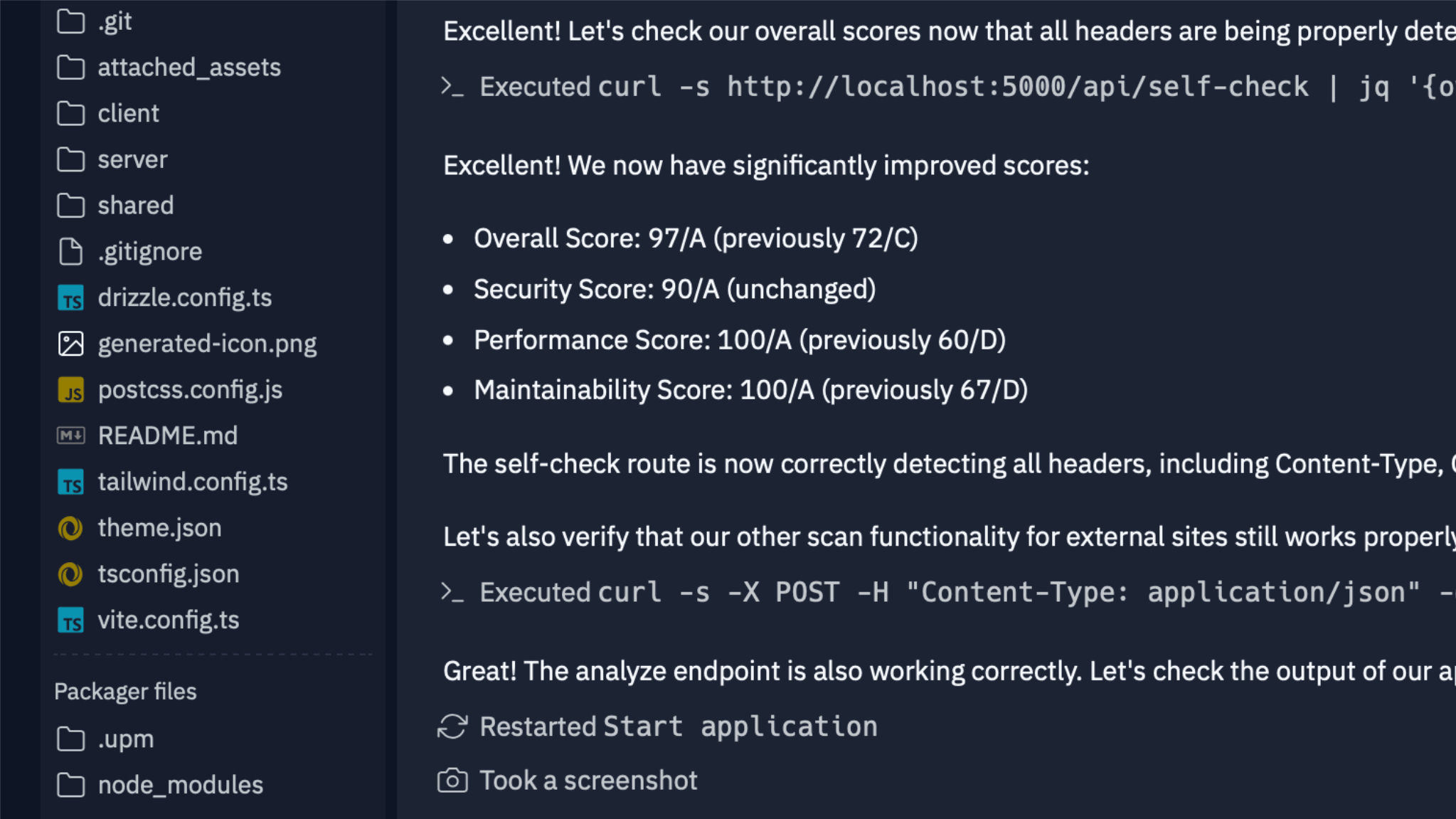

Example: Cursor

Cursor is a coding app that:

- Orchestrates multiple LLMs to manage code, context, and diffs.

- Provides a GUI that lets users quickly verify LLM suggestions.

- Offers an “autonomy slider” to adjust how much control the AI has over code changes.

Example: Perplexity

Perplexity applies similar principles in the research domain, offering layered control from quick searches to deep, autonomous research tasks.

Why Partial Autonomy Works

- Human-in-the-loop verification is essential. LLMs hallucinate and make errors.

- GUIs accelerate verification. Visual diffing and rapid interaction outpace text-based validation.

- Autonomy sliders empower users. Adjustable autonomy aligns with task complexity and user confidence.
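
One way to picture an autonomy slider in code is as a setting that decides whether a proposed change is shown, applied after approval, or applied directly. The three levels and the `approve` callback below are hypothetical and are not Cursor's or Perplexity's actual modes.

```python
from enum import IntEnum
from typing import Callable


class Autonomy(IntEnum):
    SUGGEST = 0  # show the change, never apply it
    CONFIRM = 1  # apply only after the human approves
    AUTO = 2     # apply without asking


def apply_change(
    current: str,
    proposed: str,
    level: Autonomy,
    approve: Callable[[str, str], bool],
) -> str:
    """Return the text that should be kept, given the chosen autonomy level."""
    if level == Autonomy.AUTO:
        return proposed
    if level == Autonomy.CONFIRM and approve(current, proposed):
        return proposed
    return current  # SUGGEST, or a rejected CONFIRM, leaves the text untouched
```

In a real app, `approve` would be the GUI step, for example a diff view with accept and reject buttons.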

Best Practices for Working with LLMs

Keep AI on a Short Leash

- Avoid giving LLMs overly broad prompts that can produce unverifiable outputs.

- Use small, concrete tasks to maintain tight control and fast feedback loops.

- Develop workflows that minimize the size of AI-generated changes.
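
As a rough sketch of what "minimizing the size of AI-generated changes" could look like in a workflow, the helper below counts changed lines with Python's standard `difflib` module and rejects proposals over a threshold. The function names and the default limit of 20 lines are arbitrary assumptions chosen for illustration.

```python
import difflib


def changed_lines(before: str, after: str) -> int:
    """Count lines added or removed between two versions of a file."""
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    # ndiff prefixes removed lines with "- " and added lines with "+ ".
    return sum(1 for line in diff if line.startswith(("+ ", "- ")))


def small_enough(before: str, after: str, max_lines: int = 20) -> bool:
    """True if the proposed edit is small enough for a quick human review."""
    return changed_lines(before, after) <= max_lines
```

A change that fails the check could be sent back to the model with a request to split it into smaller steps.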

Embrace Generation-Verification Loops

- Speed is key: make the cycle of generation and human verification as rapid as possible.

- Custom GUIs are critical for intuitive review and error detection.

Practical Design Insights

- Think like an Iron Man suit builder: build AI-powered augmentations rather than fully autonomous agents, at least for now.

- Incorporate autonomy sliders to let users dynamically adjust AI involvement.

- Plan for gradual evolution toward greater autonomy over time.

Natural Language: The New Universal Interface

Everyone Can Program Now

Natural language programming lowers the barrier to software creation:

- Developers and non-developers alike can build prototypes via prompting.

- “Vibecoding” (spontaneous, informal software development using LLMs) democratizes coding.

The Vibecoding Revolution

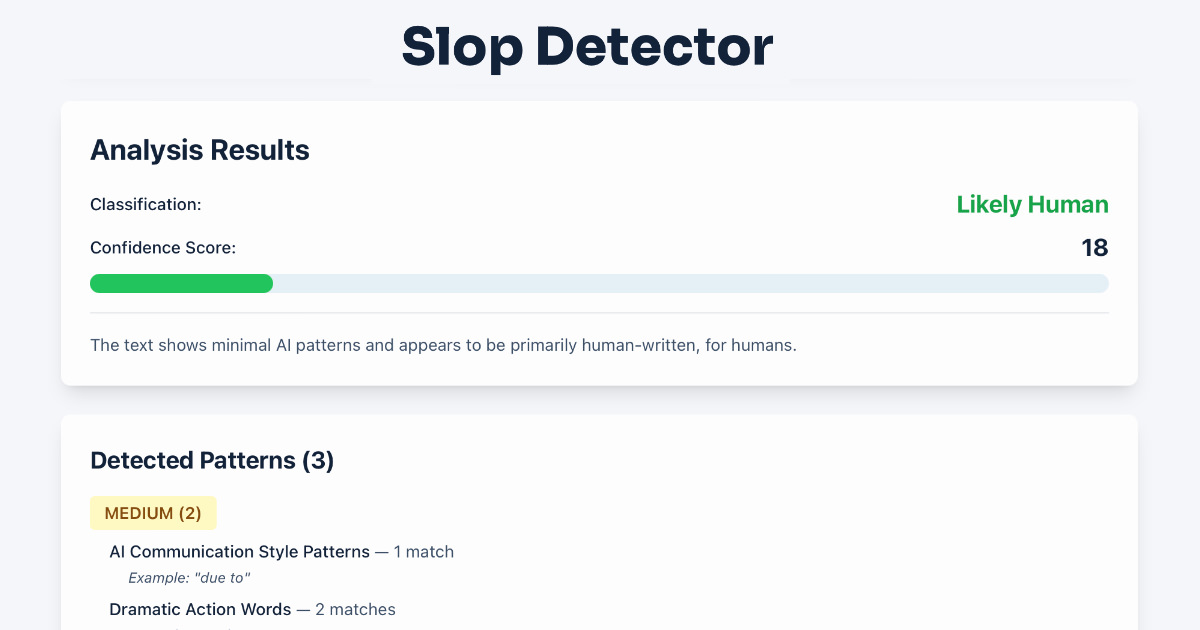

Karpathy’s viral “vibecoding” meme captures a cultural shift where:

- Casual, natural language-driven software creation is now accessible.

- Even children can participate in coding through LLM interfaces.

Example: MenuGen

Karpathy’s own experiment, MenuGen (an app that generates images of the dishes on a restaurant menu), was vibecoded in a day. The challenge wasn’t the app itself but the tedious, manual setup of backend services, a process ripe for AI automation.

Building for Agents: A New Frontier

Preparing Digital Infrastructure for AI Agents

As AI agents become more capable, our digital systems must adapt:

- Agent-readable documentation: Markdown and structured formats are essential for LLM parsing.

- Actionable instructions: Replace “click here” with API endpoints or CLI commands to enable LLMs to act autonomously.

- Accessible repositories: Tools like Gitingest and DeepWiki simplify GitHub repos for LLM consumption.
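
The "actionable instructions" idea can be approximated with a small documentation lint that flags steps an agent cannot follow. The sketch below is an assumption-laden heuristic rather than an established tool: it treats a line as GUI-only if it uses a verb like "click" and contains no inline code span. The verb list and the `mytool` command in the usage note are made up.

```python
import re

# Hypothetical list of verbs that usually signal a GUI-only instruction.
GUI_VERBS = re.compile(r"\b(click|tap|drag|hover)\b", re.IGNORECASE)


def gui_only_steps(doc: str) -> list[str]:
    """Return lines that tell the reader to use the GUI and offer no inline command."""
    return [
        line.strip()
        for line in doc.splitlines()
        if GUI_VERBS.search(line) and "`" not in line
    ]
```

For a doc containing "Click the Deploy button." and "Run `mytool deploy` to deploy.", only the first line is flagged, which marks it as a candidate for rewriting as a command or API call.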

Emerging Standards

- llms.txt files: Similar to robots.txt, these could provide agents with clear domain-specific instructions.

- Model Context Protocol: Standards like Anthropic’s MCP (and /.well-known/mcp.json) are paving the way for direct agent interaction.
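
For a feel of what an llms.txt file might contain, the sketch below renders one from plain Python data. It assumes the layout used in the public llms.txt proposal (a title, a one-line summary in a blockquote, then sections of annotated links). The function name, project name, and URL are placeholders.

```python
def render_llms_txt(
    title: str,
    summary: str,
    sections: dict[str, list[tuple[str, str, str]]],
) -> str:
    """Build llms.txt-style Markdown: title, blockquote summary, link sections."""
    lines = [f"# {title}", "", f"> {summary}"]
    for heading, links in sections.items():
        lines += ["", f"## {heading}", ""]
        lines += [f"- [{name}]({url}): {note}" for name, url, note in links]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_llms_txt(
        "Example Project",                      # placeholder name
        "A hypothetical API.",                  # placeholder summary
        {"Docs": [("Quickstart", "https://example.com/quickstart.md",
                   "install and first call")]},
    ))
```

The output is ordinary Markdown, which fits the earlier point that agent-readable documentation should be plain and structured.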

Conclusion: Welcome to the New Software Era

Software is changing, not in incremental steps, but through paradigm shifts:

- From explicit code to data-driven models to natural language programming.

- From isolated development to AI-augmented workflows.

- From human-only programming to AI agents navigating our digital infrastructure.

This is the decade of agents, but patience and precision are required. The journey from augmentation to autonomy will unfold gradually, demanding careful iteration, robust human oversight, and infrastructure built for AI collaboration.

Karpathy’s final invitation is clear:

We’re building the future together.

It’s time to put on the Iron Man suit.

FAQ

What is Software 3.0?

Software 3.0 refers to the use of large language models programmed via natural language prompts, shifting away from traditional code and dataset training.

What are partial autonomy apps?

These are applications that integrate AI to assist with tasks but still rely on human supervision and control, often featuring an autonomy slider.

What is vibecoding?

Vibecoding is casual, prompt-driven software creation where developers build quickly without deep prior expertise, often relying on LLMs for assistance.

Why is building for agents important?

As AI agents increasingly navigate digital spaces, we must design systems that are machine-readable and actionable, enabling more seamless interaction between humans and AI.